These days, when fellow techy sorts find out I work for VMware, they often want to know what I think about Xen. With yesterday's Red Hat PR event containing a protracted mash note to Xen, and Slashdot boiling over with the usual speculation, it seems particularly topical today to answer this FAQ. I'd like to especially, super-duper emphasize that this is just me babbling, and has nothing to do with anything officially believed, encouraged, advocated, etc., by my employer.

For those just tuning in, Xen technically differentiates itself from VMware by the somewhat lugubriously named technique of "paravirtualization." This term refers to co-designing a guest OS along with the virtual machine monitor, to optimize the fit between them. Obviously, this isn't always possible. If you intend to run an OS whose source code is inaccessible, or perhaps doesn't even exist in electronically readable form anymore, paravirtualization is not an option. Paravirtualization contrasts with the "black-box" virtualization practiced by classical VMMs, wherein the OS is carefully kept unaware that the VMM exists, and the VMM in turn has little semantic knowledge about the OS.
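To make the contrast concrete, here is a toy sketch in Python. It is not how Xen, VMware, or any real VMM is implemented; every class, method, and instruction name below (`ToyVMM`, `load_page_table_base`, and so on) is invented for illustration. It assumes a simplified "classical" monitor that catches a privileged operation and emulates it, next to a guest that has been modified to ask the monitor directly.

```python
class ToyVMM:
    """Hypothetical monitor that owns the 'real' page-table base register."""

    def __init__(self):
        self.page_table_base = 0
        self.traps = 0        # privileged operations caught and emulated
        self.hypercalls = 0   # explicit requests from a cooperating guest

    def trap_and_emulate(self, instruction, operand):
        # Black-box path: the guest thinks it ran a privileged instruction
        # on bare hardware; the monitor intercepts it and emulates the effect.
        self.traps += 1
        if instruction == "load_page_table_base":
            self.page_table_base = operand
        else:
            raise ValueError(f"unhandled instruction: {instruction}")

    def hypercall_set_page_table_base(self, value):
        # Paravirtual path: the guest knows the monitor exists and calls
        # an interface that was designed together with the guest OS.
        self.hypercalls += 1
        self.page_table_base = value


class BlackBoxGuest:
    """Unmodified OS: it only knows about a CPU, never about a VMM."""

    def __init__(self, cpu):
        self.cpu = cpu  # callable standing in for "execute this instruction"

    def switch_address_space(self, base):
        self.cpu("load_page_table_base", base)


class ParavirtualGuest:
    """Modified OS: its source was changed to talk to the monitor."""

    def __init__(self, vmm):
        self.vmm = vmm

    def switch_address_space(self, base):
        self.vmm.hypercall_set_page_table_base(base)


vmm = ToyVMM()
BlackBoxGuest(vmm.trap_and_emulate).switch_address_space(0x1000)
ParavirtualGuest(vmm).switch_address_space(0x2000)
print(vmm.traps, vmm.hypercalls, hex(vmm.page_table_base))
```

The point of the sketch is where the knowledge lives: `BlackBoxGuest` needs no changes but leaves the monitor guessing at intent one instruction at a time, while `ParavirtualGuest` requires editing the guest's source, which is exactly what is impossible for a closed-source OS.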

I had worked at VMware for a while when I first heard the term, and paravirtualization initially struck me as a bit of a hack; i.e., it looked like a way to get some of the advantages of virtual machines, without having to solve some of the ludicrous problems that the x86 presents for classical VMMs. However, after talking to the L4 team a couple of years ago, I have been won over, with some qualifications, to the view that paravirtualization can be a legitimate result of conscious system design. In some ways, paravirtualization shows the way towards a unifying scheme for CPU architectures, OS interfaces, "artificial" virtual machines like Java, etc. After all, what is a UNIX process if not a "virtual machine" with some convenient extra capabilities for writing performant applications?

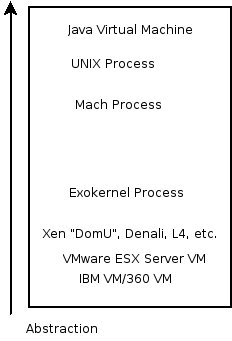

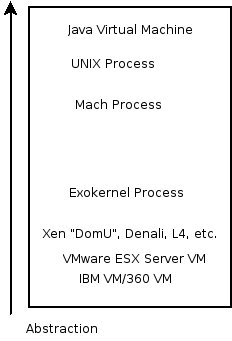

We can classify any software system that wraps underlying hardware along a continuum of levels of abstraction. A purist black-box VMM, which exports a software interface identical to underlying hardware, à la the golden-age IBM VM/360 systems, sits at the lowest edge of the universe, while something like a JVM, which exposes no details at all of the underlying hardware, sits at the top.

(Trivia: Note that VMware's ESX Server doesn't quite sit as near the bottom as the VM/360 machines, because some aspects of the underlying hardware are mutated by the VMware virtualization layer. For instance, the vast diversity of hardware on current PCs is normalized to a basis set of hardware we're comfortable emulating. When easy opportunities to "lightly paravirtualize" the guest have arisen, e.g., by using an abstraction of a video or SCSI card rather than a real hardware model, VMware has taken those opportunities. Still, the interface exported by ESX Server is about as close as is practical to that exposed by bare metal.)

Populating the middle ground between JVMs and bare metal, we find a bunch of familiar system-construction paradigms: traditional OSes, which provide a virtual machine that has high-level semantics (such as files, processes, threads, etc.), but is still machine-language programmable; and microkernels, which provide a slightly less high-level abstraction out of the box. A paravirtualized VMM is simply a different point on this continuum, somewhere between the bare-metal VMM provided by something like VMware ESX Server, and the higher-level (though still pretty low-level) interface provided by an exokernel.

So, that's Xen in a nutshell. It sits at a different point in the continuum of system design than current VMware products do. It offers some of the advantages of VMware products (unmodified application binaries) without offering others (unmodified system-level binaries). The Xen guys are fond of implying that they'll get around to offering those other advantages, perhaps with some coy references to VT and SVM. For various reasons, I think the technical obstacles to achieving parity with VMware's offerings are greater than they realize. Then again, maybe they've started to realize it in the process of slipping Xen 3.0 from August to December. (Not to be too smug; we've slipped releases, too. So has everybody.)

On a personal note, I've had the pleasure of hanging out with various Xen movers and shakers. They're smart people interested in solving problems, and, fairly enough, would like to make a few pounds sterling doing it. They can also really hold their liquor. So, I wish them luck. I've got no problem at all with a little competition. It's good for our customers, good for virtualization as a whole, and keeps my job more interesting. So, bring it on, Xennies! I dare you to make Xen 3.0 as good as you know how.